BUENOS AIRES—“It is difficult to make predictions, especially about the future.” This quote, often attributed to Nobel Prize-winning physicist Niels Bohr, has never been more valid than when applied to the ongoing debate about AI and labor markets.

A few years ago, the consensus was clear. Automation would likely replace medium-skilled jobs, depress low-skilled wages due to competition from displaced middle-skilled workers, and widen the skill premium. This trend was exacerbated by the decline of jobs that require personal contact and the surge in remote work and e-commerce during the pandemic. As a result, low- and middle-income countries, including those in Latin America, with less qualified workforces, were considered the most vulnerable to technological substitution—where vulnerability was measured as the probability of an occupation or a task being automated.

Education, especially higher education and lifelong learning, was seen as the key to adapting to automation. As recently as May 2023, experts believed that employment in high-income economies would remain stable or even increase, as automation would create as many jobs as it would destroy.

But fast forward to today, and these assumptions no longer hold. In fact, the most likely outcomes may be the opposite—with big consequences for Latin American economies.

Firstly, generative AI is de-skilling: It replaces knowledge and skills. By undermining the advantages of education and seniority, it narrows the skill premium, a “Robin Hood effect” that could equalize wages downward while further reducing labor’s share of income. Although the notion that coders are the new blue-collar workers is not new, generative AI shows that education alone cannot compete with advancing technology in a much broader sense. Moreover, it calls into question the estimates of exposure to automation: by the time we achieve Artificial General Intelligence (AGI), which experts predict could happen within 20 years, no activity will be immune to technological substitution. Consequently, it is high-income countries, which have a large stock of human capital to lose to automation, that are now the most exposed to technological substitution in the foreseeable future.

The human factor

But there’s more to consider. Critically, we have to distinguish between potential substitution, driven by technological capabilities, and actual substitution, influenced by limits imposed by users and consumers.

As we argue in a new book, the frontier of substitution will likely not be technological. While on the supply side of the automation market there may be no technological restrictions to replacing human work, cultural, social, legal, or moral constraints on the demand side will play a critical role.

For example, in 2016, researchers launched The Moral Machine, a platform intended to “gather the human perspective on moral decisions made by machine intelligence.” One scenario presented the trolley problem from the perspective of an autonomous car: How should a self-driving car react to a life-or-death decision? Besides sparking fascinating moral discussions, these exercises highlighted a legal issue: While we might accept a human’s imperfect reaction to an accident, an autonomous car’s decision would make the manufacturer liable, a potential obstacle to the widespread adoption of fully self-driving cars, despite their technical readiness.

Another example is the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), an algorithm used by judges in many U.S. states to assess recidivism risk, which has been shown to replicate human biases, including racism. While a judge’s profiling may be criticized, a biased algorithm poses a significant legal and economic liability, potentially deterring its implementation.

AI engines’ regurgitation of copyrighted material also presents issues, highlighted in the New York Times lawsuit against ChatGPT’s owner, OpenAI. OpenAI argued that using human-written pieces, copyrighted or not, was essential for developing Large Language Models (LLMs) like GPT. And they will always be essential: how else would LLMs stay abreast of linguistic, aesthetic, cultural, social and moral changes?

There is also the “aura” surrounding creators and artisans, which may protect their work from AI competition. While AI could compose music indistinguishable from human compositions, people may not prefer artificial music or attend concerts without human performers (imagine a night at the Met with lyrical robots, or a Kraftwerk concert where the four members of the group leave the stage to the computers). AI might produce films based on actors’ likenesses, but audiences would still cherish the real thing and resist technological intrusion (check out the complaints about a marginal use of AI in the recent movie for a hint). This concept extends beyond art to many current occupations: consumers may still choose a human cook over a robot chef, or “flawed” handcrafted items over immaculate AI décor.

Lastly, there is the shock of the unnatural. There is something eerie about a robot teacher or a disembodied therapist, related to the uncanny valley theory, which postulates a non-monotonic relationship between the resemblance of an object (or an image) to a human being and our emotional response to it. Think of Yul Brynner’s terrifying cyborg cowboy in the original Westworld, or the artificially young Harrison Ford in the latest Indiana Jones. With all this in mind, it becomes clear that technology alone will not dictate technological substitution. The technologist’s timeframe may be an underestimate, due to these human elements. (And this doesn’t even touch on concerns about disinformation and cybersecurity central to the current calls for AI regulation and pauses.)

Mitigation, adaptation and preparedness

Comparing the technological revolution to climate change helps us broaden the AI debate. On climate change, as the world keeps running behind its climate goals, the focus is increasingly on adaptation, though mitigation remains essential. For AI, despite the well-grounded belief that technology cannot go unchecked, in a multipolar world its advancement is inevitable—and adaptation is key.

Thinking about AI exposure should go beyond checklists of automatable tasks and emphasize preparedness. That should include education, labor market policies, tax and transfer arrangements that promote technologies that are more productive and complementary to human labor, and regulations (like a “made by humans” rating) that enhance the human factor and expand human occupations. We need tools to manage the volatile transition towards a future where traditional work may decline and a new income distribution architecture will be needed.

Where does all that leave Latin America?

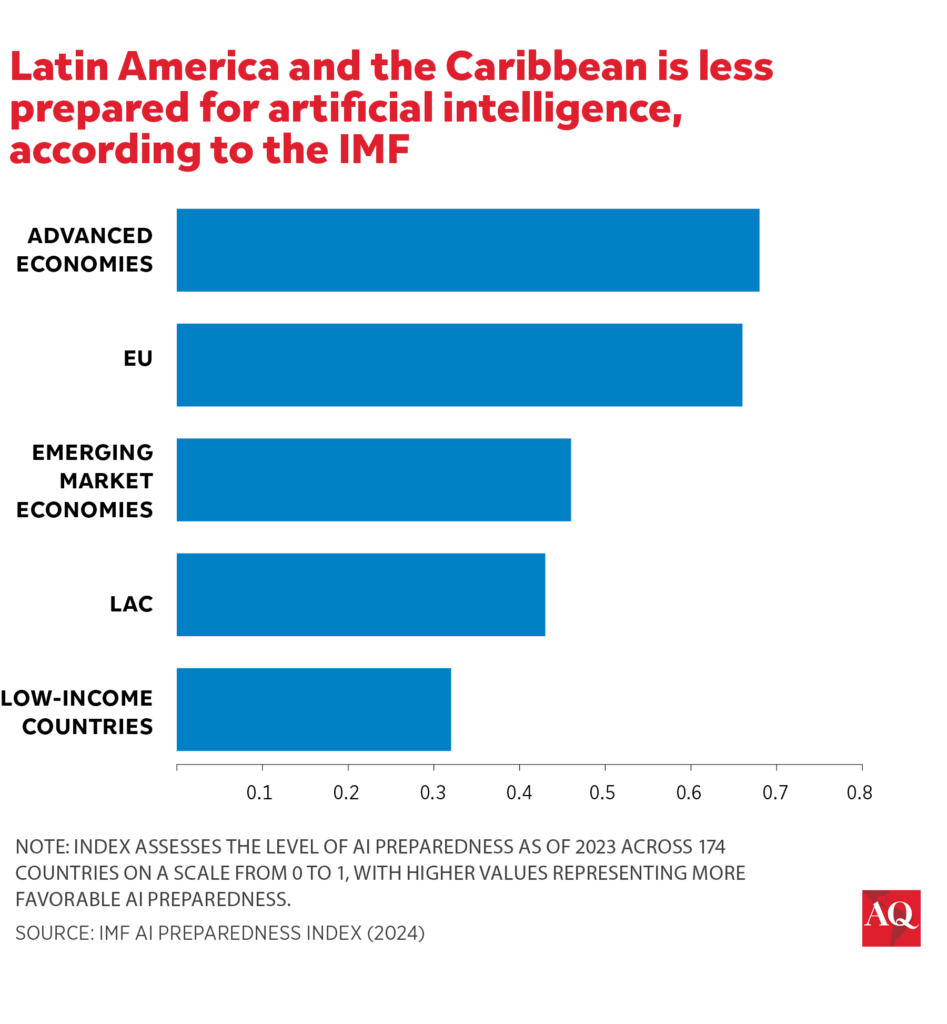

This complicated outlook is on display in the recent IMF report on AI. While, as noted, Latin America appears less vulnerable to AI substitution, the report’s AI Preparedness Index suggests that the region is also less prepared for AI. The balance between these two metrics is hard to quantify.

Clear factors driving the region’s unpreparedness include rigid and outdated education systems, below-average math and reading scores, scarce and unequal digital connectivity, and limited vocational training and reskilling programs due to widespread precarity and informality.

On the upside, cultural resistance to automation may be stronger. It’s easier to imagine robot nannies or AI therapists in Japan or China than in a traditional Latin American country. One thing is certain: Current measures of exposure do not fully capture the non-technological factors that will be critical in determining AI substitution in the future.