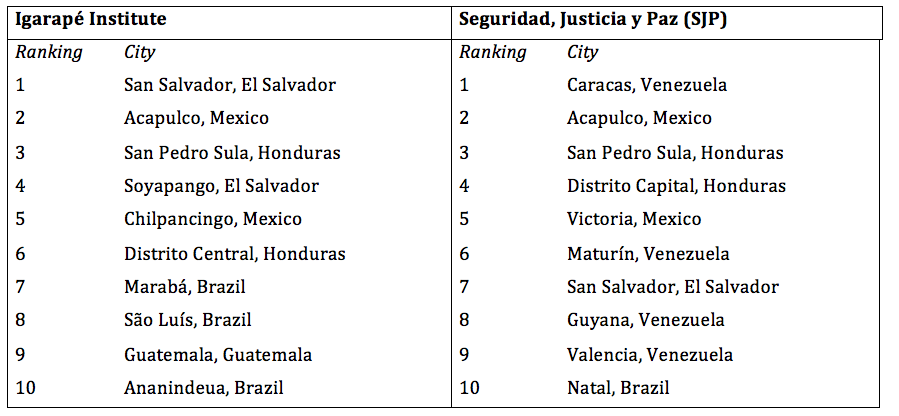

Another year, another ranking of the world’s most murderous cities. The latest study released by the Mexican think tank Seguridad, Justicia y Paz (SJP) suggests that Caracas took the top spot in 2016. Earlier this year, the Igarapé Institute and The Economist released a table tipping San Salvador as number one.

So which is it?

Both, or neither: it depends on how you count. The truth behind headline-grabbing superlatives is that counting the dead is an inexact science. Debate continues over how, and for what purpose, we classify the planet’s most dangerous cities. Although Latin America is home to 43 of the world’s 50 most dangerous cities (according to most counts, including those of both SJP and Igarapé), our understanding of violence in the region remains incomplete. That’s precisely why more research, more information and, yes, more lists are so vital to understanding and curbing violence and homicide, in the region and beyond.

The challenge of accurately comparing violence across cities is clear from taking a closer look at the differences between SJP and Igarapé Institute data.

Decisions about how and where to include cities on a given list depend on the information available, how it is collected, and how it is interpreted. These decisions can have profound effects on policy. Cities featuring prominently at the top of these lists may be downgraded by financial services companies and avoided by tourists. When cities are excluded from (or fall off) the ranking, politicians may avoid prioritizing and investing in homicide prevention and reduction.

This does not mean that listing and comparing violence across borders should be avoided; quite the contrary. But it does mean that anyone hoping to draw conclusions from a given list should understand where the differences in measurement lie, and what they mean.

The first big difference between the SJP and Igarapé Institute lists comes down to how cities are defined. This is perhaps not surprising, since there is no internationally agreed definition of what constitutes a city. Because a homicide rate depends on both the number of killings and the population counted, where a city’s boundaries are drawn directly shapes its rate. The SJP, for instance, may combine multiple municipalities into a single sprawling city: its definition of Cali includes Yumbo, a city 21 kilometers away. Meanwhile, the Igarapé Institute includes cities such as Ananindeua (Brazil), Soyapango (El Salvador) and Villa Nueva (Guatemala), which are excluded from the SJP list.
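To make the boundary effect concrete, here is a minimal sketch assuming the common convention of expressing homicide rates per 100,000 residents. The `homicide_rate` helper and every figure in it are hypothetical and illustrative only; they do not describe Cali, Yumbo or any real city, nor the exact formula either organization uses.

```python
# Minimal sketch: how the choice of city boundary changes a homicide rate.
# Assumes rates are expressed per 100,000 residents.
# All figures are HYPOTHETICAL and do not describe any real city.

def homicide_rate(homicides: int, population: int) -> float:
    """Return homicides per 100,000 residents."""
    return homicides / population * 100_000

# Hypothetical core city and a hypothetical adjacent municipality.
core = {"homicides": 1_200, "population": 2_400_000}
neighbor = {"homicides": 60, "population": 300_000}

# Rate when the city is defined as the core municipality alone.
core_only = homicide_rate(core["homicides"], core["population"])

# Rate when the neighbor is folded into one "sprawling" city.
combined = homicide_rate(
    core["homicides"] + neighbor["homicides"],
    core["population"] + neighbor["population"],
)

print(f"core only: {core_only:.1f} per 100,000")
print(f"combined:  {combined:.1f} per 100,000")
```

In this made-up case, merging a less violent neighbor pulls the city’s rate down from 50.0 to about 46.7; merging a more violent one would push it up. The same city can therefore rise or fall in a ranking without a single additional killing, purely because of where its boundary is drawn.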

The second major discrepancy relates to the underlying data on homicide. There are many sources of information on murder, ranging from public health and crime statistics to media articles, human rights reports, and scientific estimations. The SJP, for its part, adopts a flexible approach to data, drawing on government, non-governmental, academic and journalistic sources. The Igarapé Institute, by contrast, limits its information to a tighter selection of authoritative sources. As a result, it excludes some data that cannot be verified.

Not surprisingly, decisions about which homicide data to use can dramatically affect which cities are included. The most prominent example is Venezuela, whose cities the SJP includes and the Igarapé Institute excludes (though it writes about them separately). The Venezuelan authorities release crime statistics only sporadically, and their quality varies. Local research organizations also generate estimates, but these are based on very small samples.

The third challenge relates to data availability more generally. Comparative information on cities is uneven to begin with, and even more so for homicidal violence. Even in information-rich environments such as North America and Europe, it is difficult to generate reliable data on murder over time at the municipal and metropolitan scale. The challenges are far greater elsewhere in the world. As a result, there are inevitably holes in our knowledge.

Yet the secret to reducing homicide is ensuring that interventions are data-driven and based on solid evidence of what works. Policymakers such as former Bogotá mayor Antanas Mockus, former Medellín mayor Sergio Fajardo and two-time Cali mayor Rodrigo Guerrero have long understood this. Advances in data processing and machine learning have given rise to an array of digital platforms that monitor criminal violence in real time and help identify the best ways to prevent and disrupt it.

There is nothing wrong with having multiple platforms that rank homicide in cities. Indeed, this should be encouraged, since it creates opportunities to triangulate and verify data and sources. But it is also critical that organizations are clear about how the unit of analysis is defined, how their data is collected, and what criteria govern inclusion and exclusion. Failing to do so not only misleads the media but can also produce misguided policy prescriptions on the ground.

The SJP and Igarapé Institute provide reasonably clear methodological notes, but there is always room for improvement.

This article series supports the Instinto de Vida (Instinct for Life) campaign, an effort by more than 20 civil society groups and international organizations to reduce homicide in seven Latin American countries by 50 percent over 10 years. Beginning in 2017, the campaign promotes annual homicide reductions of 7.5 percent in Brazil, Colombia, El Salvador, Guatemala, Honduras, Mexico and Venezuela, a reduction that would prevent the loss of 364,000 lives.
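How 7.5 percent a year adds up to the 50 percent target can be checked with a short calculation. This is a sketch under one stated assumption: that each year’s 7.5 percent cut applies to the previous year’s level (that is, it compounds), starting from an arbitrary baseline index of 100. It does not model the campaign’s estimate of lives saved.

```python
# Minimal sketch, ASSUMING the 7.5 percent annual cut compounds:
# each year's reduction applies to the previous year's homicide level.
annual_cut = 0.075  # 7.5 percent per year
years = 10

level = 100.0  # baseline homicide level, indexed to 100
for _ in range(years):
    level *= 1 - annual_cut  # apply one year's reduction

print(f"remaining after {years} years: {level:.1f}% of baseline")
print(f"cumulative reduction: {100 - level:.1f}%")
```

Under this assumption, ten consecutive 7.5 percent cuts leave homicides at roughly 45.9 percent of the baseline, a cumulative reduction of about 54 percent, so the annual goal slightly overshoots the 50 percent headline target.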
